1.3.2.1. Terraform Basic Concepts: State Management

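As mentioned at the end of Chapter 1, once a terraform apply has succeeded and created the desired infrastructure, running terraform apply again produces an execution plan with no changes. Terraform remembers the current state of the infrastructure and compares it with the desired state described by the code. On the second apply the current state already matches the code, so the resulting plan is empty.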

1.3.2.1.1. A First Look at the State File

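This is where Terraform introduces a distinctive concept: state management. Configuration management tools such as Ansible, and home-grown tools that call an SDK to operate infrastructure, have no equivalent. Put simply, every time Terraform changes infrastructure it records the resulting state in a state file, which by default is terraform.tfstate in the current working directory. For example, in the code we used earlier against the LocalStack mock environment, we declare one data source and one resource: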

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~>5.0"

}

}

}

provider "aws" {

access_key = "test"

secret_key = "test"

region = "us-east-1"

s3_use_path_style = false

skip_credentials_validation = true

skip_metadata_api_check = true

skip_requesting_account_id = true

endpoints {

apigateway = "http://localhost:4566"

apigatewayv2 = "http://localhost:4566"

cloudformation = "http://localhost:4566"

cloudwatch = "http://localhost:4566"

dynamodb = "http://localhost:4566"

ec2 = "http://localhost:4566"

es = "http://localhost:4566"

elasticache = "http://localhost:4566"

firehose = "http://localhost:4566"

iam = "http://localhost:4566"

kinesis = "http://localhost:4566"

lambda = "http://localhost:4566"

rds = "http://localhost:4566"

redshift = "http://localhost:4566"

route53 = "http://localhost:4566"

s3 = "http://s3.localhost.localstack.cloud:4566"

secretsmanager = "http://localhost:4566"

ses = "http://localhost:4566"

sns = "http://localhost:4566"

sqs = "http://localhost:4566"

ssm = "http://localhost:4566"

stepfunctions = "http://localhost:4566"

sts = "http://localhost:4566"

}

}

data "aws_ami" "ubuntu" {

most_recent = true

filter {

name = "name"

values = ["ubuntu/images/hvm-ssd/ubuntu-trusty-14.04-amd64-server-20170727"]

}

filter {

name = "virtualization-type"

values = ["hvm"]

}

owners = ["099720109477"] # Canonical

}

resource "aws_instance" "web" {

ami = data.aws_ami.ubuntu.id

instance_type = "t3.micro"

tags = {

Name = "HelloWorld"

}

}

After running terraform apply, terraform.tfstate contains the following:

{

"version": 4,

"terraform_version": "1.7.3",

"serial": 1,

"lineage": "159018e2-63f4-2dfa-ce0d-873a37a1e0a7",

"outputs": {},

"resources": [

{

"mode": "data",

"type": "aws_ami",

"name": "ubuntu",

"provider": "provider[\"registry.terraform.io/hashicorp/aws\"]",

"instances": [

{

"schema_version": 0,

"attributes": {

"architecture": "x86_64",

"arn": "arn:aws:ec2:us-east-1::image/ami-1e749f67",

"block_device_mappings": [

{

"device_name": "/dev/sda1",

"ebs": {

"delete_on_termination": "false",

"encrypted": "false",

"iops": "0",

"snapshot_id": "snap-15bd5527",

"throughput": "0",

"volume_size": "15",

"volume_type": "standard"

},

"no_device": "",

"virtual_name": ""

}

],

"boot_mode": "",

"creation_date": "2024-02-20T13:52:42.000Z",

"deprecation_time": "",

"description": "Canonical, Ubuntu, 14.04 LTS, amd64 trusty image build on 2017-07-27",

"ena_support": false,

"executable_users": null,

"filter": [

{

"name": "name",

"values": [

"ubuntu/images/hvm-ssd/ubuntu-trusty-14.04-amd64-server-20170727"

]

},

{

"name": "virtualization-type",

"values": [

"hvm"

]

}

],

"hypervisor": "xen",

"id": "ami-1e749f67",

"image_id": "ami-1e749f67",

"image_location": "amazon/getting-started",

"image_owner_alias": "amazon",

"image_type": "machine",

"imds_support": "",

"include_deprecated": false,

"kernel_id": "None",

"most_recent": true,

"name": "ubuntu/images/hvm-ssd/ubuntu-trusty-14.04-amd64-server-20170727",

"name_regex": null,

"owner_id": "099720109477",

"owners": [

"099720109477"

],

"platform": "",

"platform_details": "",

"product_codes": [],

"public": true,

"ramdisk_id": "ari-1a2b3c4d",

"root_device_name": "/dev/sda1",

"root_device_type": "ebs",

"root_snapshot_id": "snap-15bd5527",

"sriov_net_support": "",

"state": "available",

"state_reason": {

"code": "UNSET",

"message": "UNSET"

},

"tags": {},

"timeouts": null,

"tpm_support": "",

"usage_operation": "",

"virtualization_type": "hvm"

},

"sensitive_attributes": []

}

]

},

{

"mode": "managed",

"type": "aws_instance",

"name": "web",

"provider": "provider[\"registry.terraform.io/hashicorp/aws\"]",

"instances": [

{

"schema_version": 1,

"attributes": {

"ami": "ami-1e749f67",

"arn": "arn:aws:ec2:us-east-1::instance/i-288a34165ed2ad2f7",

"associate_public_ip_address": true,

"availability_zone": "us-east-1a",

"capacity_reservation_specification": [],

"cpu_core_count": null,

"cpu_options": [],

"cpu_threads_per_core": null,

"credit_specification": [],

"disable_api_stop": false,

"disable_api_termination": false,

"ebs_block_device": [],

"ebs_optimized": false,

"enclave_options": [],

"ephemeral_block_device": [],

"get_password_data": false,

"hibernation": false,

"host_id": "",

"host_resource_group_arn": null,

"iam_instance_profile": "",

"id": "i-288a34165ed2ad2f7",

"instance_initiated_shutdown_behavior": "stop",

"instance_lifecycle": "",

"instance_market_options": [],

"instance_state": "running",

"instance_type": "t3.micro",

"ipv6_address_count": 0,

"ipv6_addresses": [],

"key_name": "",

"launch_template": [],

"maintenance_options": [],

"metadata_options": [],

"monitoring": false,

"network_interface": [],

"outpost_arn": "",

"password_data": "",

"placement_group": "",

"placement_partition_number": 0,

"primary_network_interface_id": "eni-68899bf6",

"private_dns": "ip-10-13-239-41.ec2.internal",

"private_dns_name_options": [],

"private_ip": "10.13.239.41",

"public_dns": "ec2-54-214-132-221.compute-1.amazonaws.com",

"public_ip": "54.214.132.221",

"root_block_device": [

{

"delete_on_termination": true,

"device_name": "/dev/sda1",

"encrypted": false,

"iops": 0,

"kms_key_id": "",

"tags": {},

"throughput": 0,

"volume_id": "vol-6dde834f",

"volume_size": 8,

"volume_type": "gp2"

}

],

"secondary_private_ips": [],

"security_groups": [],

"source_dest_check": true,

"spot_instance_request_id": "",

"subnet_id": "subnet-dbb4c2f9",

"tags": {

"Name": "HelloWorld"

},

"tags_all": {

"Name": "HelloWorld"

},

"tenancy": "default",

"timeouts": null,

"user_data": null,

"user_data_base64": null,

"user_data_replace_on_change": false,

"volume_tags": null,

"vpc_security_group_ids": []

},

"sensitive_attributes": [],

"private": "eyJlMmJmYjczMC1lY2FhLTExZTYtOGY4OC0zNDM2M2JjN2M0YzAiOnsiY3JlYXRlIjo2MDAwMDAwMDAwMDAsImRlbGV0ZSI6MTIwMDAwMDAwMDAwMCwidXBkYXRlIjo2MDAwMDAwMDAwMDB9LCJzY2hlbWFfdmVyc2lvbiI6IjEifQ==",

"dependencies": [

"data.aws_ami.ubuntu"

]

}

]

}

],

"check_results": null

}

As we can see, both the data that was queried and the resource that was created are saved in the tfstate file as JSON.

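As noted above, because the tfstate file exists, a second apply immediately after the first makes no changes. What happens if we delete the tfstate file and then run apply again? Terraform cannot read any state, so it assumes this is the first time these resources are being created and creates every resource described in the code once more. Worse, because the state describing the previously created resources has been deleted, terraform destroy can no longer find and reclaim them, and the result is a resource leak. Keeping the state file safe is therefore very important.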

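In addition, if we modify the Terraform code so that the generated plan will change the state, then before applying the change Terraform copies the current tfstate file to terraform.tfstate.backup in the same directory, as a safeguard against the tfstate being damaged by an accident.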

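Very early in Terraform's history, HashiCorp experimented with a design that had no state file: when executing a plan, Terraform attached a specific tag to every resource involved, and on the next run it rebuilt the state by reading the resources back through those tags. Because not every resource type supports tags, and not every public cloud supports multiple tags, Terraform eventually settled on the state file design.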

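One more point: HashiCorp has never officially published the tfstate format, which means HashiCorp reserves the right to change it at any time. Do not try to modify tfstate by hand or with home-grown code. The Terraform CLI provides commands for working with state (introduced later), so make sure you only operate on the state file through those commands.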
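As one illustration of changing state without touching the file, the sketch below renames the instance from the earlier example with a `moved` block, which Terraform 1.1 and later understand. The new name `app` is an assumption made for this example; the CLI commands mentioned in the text remain the other supported route.

```hcl
# Sketch only: "app" is a hypothetical new name for the earlier aws_instance.web.
# The moved block asks Terraform to update the address recorded in state
# during the next apply, so nobody edits terraform.tfstate by hand.
moved {
  from = aws_instance.web
  to   = aws_instance.app
}

resource "aws_instance" "app" {
  ami           = data.aws_ami.ubuntu.id # data source from the example above
  instance_type = "t3.micro"

  tags = {
    Name = "HelloWorld"
  }
}
```

With this in place, the next plan should report the resource as moved rather than destroyed and recreated.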

1.3.2.1.2. A Critical Security Warning: tfstate Is Plaintext

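There is one more extremely important fact about Terraform state, and anyone considering Terraform for production must treat it with great care: the state file is plaintext. Every secret used in the code is therefore stored in the state file in plaintext. For example, suppose we create a private key: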

resource "tls_private_key" "example" {

algorithm = "RSA"

}

After running terraform apply, look at the relevant section of the tfstate file:

{

"version": 4,

"terraform_version": "1.7.3",

"serial": 1,

"lineage": "dec42d6b-d61f-30b3-0b83-d5d8881c29ea",

"outputs": {},

"resources": [

{

"mode": "managed",

"type": "tls_private_key",

"name": "example",

"provider": "provider[\"registry.terraform.io/hashicorp/tls\"]",

"instances": [

{

"schema_version": 1,

"attributes": {

"algorithm": "RSA",

"ecdsa_curve": "P224",

"id": "d47d1465586d25322bb8ca16029fe4fb2ec001e0",

"private_key_openssh": "-----BEGIN OPENSSH PRIVATE KEY-----\nb3BlbnNzaC1rZXktdjEAAAAABG5vbmUAAAAEbm9uZQAAAAAAAAABAAABFwAAAAdz\nc2gtcnNhAAAAAwEAAQAAAQEAsdD9sJ/L5m8cRB19V20HA9BIqZwnT3id5JoMNUuW\nYn4sJTWHa/JHkQ5akrWH50aIdzQrQneIZXJyx1OMlqKbVxAc2+u/8qd2m2GrsWKZ\neqQcpNT7v76QowDcvdggad3Tezn3XU/eBukVj9i+lnN1ofyQzVEQAdvW8TpRdUL5\nb/bIsz1RzGWzUrWD8XTFLf2RuvvzhgBViuuWI0ns3WQMpa6Dcu+nWfGLCl26Wlph\nNmoUAv8wCay7KoynG58pJW+uqYA7lTx4tNMLhIW7rM4roYbXctkCi03PcW3x25O8\nyKSzYIi5xH7CQ7ggwXzcx4r06NXkaE9/LHuBSFJcIMvH8QAAA7jnMVXy5zFV8gAA\nAAdzc2gtcnNhAAABAQCx0P2wn8vmbxxEHX1XbQcD0EipnCdPeJ3kmgw1S5Zifiwl\nNYdr8keRDlqStYfnRoh3NCtCd4hlcnLHU4yWoptXEBzb67/yp3abYauxYpl6pByk\n1Pu/vpCjANy92CBp3dN7OfddT94G6RWP2L6Wc3Wh/JDNURAB29bxOlF1Qvlv9siz\nPVHMZbNStYPxdMUt/ZG6+/OGAFWK65YjSezdZAylroNy76dZ8YsKXbpaWmE2ahQC\n/zAJrLsqjKcbnyklb66pgDuVPHi00wuEhbusziuhhtdy2QKLTc9xbfHbk7zIpLNg\niLnEfsJDuCDBfNzHivTo1eRoT38se4FIUlwgy8fxAAAAAwEAAQAAAQEAleLv5ZFd\nY9mm/vfIrwg1UI6ioW4CaOfoWElOHyKfGlj2x0qu41wv3WM3D9G7REVdRPYRvQ5b\nSABIJiMUL+nTfXkUioDXpShqPyH+gyD09L8fcgYiS4fMDcrtR43GDNcyq/25uMtZ\nAYQ6a62tQc8Dik8GlDtPffGc5mxdO7X/4tLAObBPqO+lvGX2K/2hV2ql/a4fBVXR\nOMPc9A0eva2exifZyFo9vT9CCcW4iNY2BHY2hXAPI1gFpBnmnY2twFof4EvX6tfZ\nGjt20QCqTi41P8Obrfqi108zRAKtjJFeezNY+diVvxZaCDb/7ceFbFUrXq9u2UVD\nExn9joOLTJTEwQAAAIEAgOQ/mjRousgSenXW2nE2aq0m7oKQzhsF/8k5UPj6mym1\nvwUyC2gglTIOGVkUpj91L/Fh2nCuX5BLyzzIee0twRvT1Kj11BU6UoElStpR/JEC\n7trKWJrBddphBWHAuVcU5AQQPwI/9sg27q/9y16WTIQAJzx8GwGcDbgZj8/LbB4A\nAACBAMpVcX+2smqt8T9mbwU7e7ZaCQM0c3/7F2S2Z26Zl16k+8WPr6+CyJd3d2s2\nQkrmqVKJzDqPYidU0EmaNOrqytvUTUDK9KJgKsJuNC9ZbODqTCMA03ntr+hVcfdt\nd9F5fxyBJSrzpAhUQKadHs8jtAa1ENAhPmwKzvcJ51Gp4R3ZAAAAgQDg+s4nk926\njuLQGlweOi2HY6eeMQF8MOnXEhM8P7ErdRR73ql7GlhBNoGzuI6YTaqjLXBGNetj\nm4iziPLapbqBXSeia3y1JU71e1M+J342CxQwZRKTI9G/AB2AzspB4VfjAT9ZYJM1\nSFH7cDTejcrkfWko8TqyRLwtTPDa7Xlz2QAAAAAB\n-----END OPENSSH PRIVATE KEY-----\n",

"private_key_pem": "-----BEGIN RSA PRIVATE KEY-----\nMIIEpAIBAAKCAQEAsdD9sJ/L5m8cRB19V20HA9BIqZwnT3id5JoMNUuWYn4sJTWH\na/JHkQ5akrWH50aIdzQrQneIZXJyx1OMlqKbVxAc2+u/8qd2m2GrsWKZeqQcpNT7\nv76QowDcvdggad3Tezn3XU/eBukVj9i+lnN1ofyQzVEQAdvW8TpRdUL5b/bIsz1R\nzGWzUrWD8XTFLf2RuvvzhgBViuuWI0ns3WQMpa6Dcu+nWfGLCl26WlphNmoUAv8w\nCay7KoynG58pJW+uqYA7lTx4tNMLhIW7rM4roYbXctkCi03PcW3x25O8yKSzYIi5\nxH7CQ7ggwXzcx4r06NXkaE9/LHuBSFJcIMvH8QIDAQABAoIBAQCV4u/lkV1j2ab+\n98ivCDVQjqKhbgJo5+hYSU4fIp8aWPbHSq7jXC/dYzcP0btERV1E9hG9DltIAEgm\nIxQv6dN9eRSKgNelKGo/If6DIPT0vx9yBiJLh8wNyu1HjcYM1zKr/bm4y1kBhDpr\nra1BzwOKTwaUO0998ZzmbF07tf/i0sA5sE+o76W8ZfYr/aFXaqX9rh8FVdE4w9z0\nDR69rZ7GJ9nIWj29P0IJxbiI1jYEdjaFcA8jWAWkGeadja3AWh/gS9fq19kaO3bR\nAKpOLjU/w5ut+qLXTzNEAq2MkV57M1j52JW/FloINv/tx4VsVSter27ZRUMTGf2O\ng4tMlMTBAoGBAMpVcX+2smqt8T9mbwU7e7ZaCQM0c3/7F2S2Z26Zl16k+8WPr6+C\nyJd3d2s2QkrmqVKJzDqPYidU0EmaNOrqytvUTUDK9KJgKsJuNC9ZbODqTCMA03nt\nr+hVcfdtd9F5fxyBJSrzpAhUQKadHs8jtAa1ENAhPmwKzvcJ51Gp4R3ZAoGBAOD6\nzieT3bqO4tAaXB46LYdjp54xAXww6dcSEzw/sSt1FHveqXsaWEE2gbO4jphNqqMt\ncEY162ObiLOI8tqluoFdJ6JrfLUlTvV7Uz4nfjYLFDBlEpMj0b8AHYDOykHhV+MB\nP1lgkzVIUftwNN6NyuR9aSjxOrJEvC1M8NrteXPZAoGAIQf/5nSh/e51ov8LAtSq\nJqPeMsq+TFdmg0eP7Stf3dCbVa5WZRW5v5h+Q19xRR8Q52udjrXXtUoQUuO83dkE\n0wx+rCQ1+cgvUtyA4nX741/8m/5Hh/E4tXo1h8o0NFtcV//xXGi4D7AJeenOnMxc\nWHf4zbGPqj29efEA9YEBQkkCgYABhG+DgNHMAk6xTJw2b/oCob9tp7L03XeWRb7v\ndxaAzodW1oeaFvFlbzKsvZ/okw2FkDbjolV2FIR1gYTxyJBbcv9jbwomRpwjt7M2\nBhopzyVRtjzL1UAC48NPLRXcH+Lx2v5MYgRcJaK36WfR4G7v35CoAAh/T0tdmtk9\nAMEC8QKBgQCA5D+aNGi6yBJ6ddbacTZqrSbugpDOGwX/yTlQ+PqbKbW/BTILaCCV\nMg4ZWRSmP3Uv8WHacK5fkEvLPMh57S3BG9PUqPXUFTpSgSVK2lH8kQLu2spYmsF1\n2mEFYcC5VxTkBBA/Aj/2yDbur/3LXpZMhAAnPHwbAZwNuBmPz8tsHg==\n-----END RSA PRIVATE KEY-----\n",

"private_key_pem_pkcs8": "-----BEGIN PRIVATE KEY-----\nMIIEvgIBADANBgkqhkiG9w0BAQEFAASCBKgwggSkAgEAAoIBAQCx0P2wn8vmbxxE\nHX1XbQcD0EipnCdPeJ3kmgw1S5ZifiwlNYdr8keRDlqStYfnRoh3NCtCd4hlcnLH\nU4yWoptXEBzb67/yp3abYauxYpl6pByk1Pu/vpCjANy92CBp3dN7OfddT94G6RWP\n2L6Wc3Wh/JDNURAB29bxOlF1Qvlv9sizPVHMZbNStYPxdMUt/ZG6+/OGAFWK65Yj\nSezdZAylroNy76dZ8YsKXbpaWmE2ahQC/zAJrLsqjKcbnyklb66pgDuVPHi00wuE\nhbusziuhhtdy2QKLTc9xbfHbk7zIpLNgiLnEfsJDuCDBfNzHivTo1eRoT38se4FI\nUlwgy8fxAgMBAAECggEBAJXi7+WRXWPZpv73yK8INVCOoqFuAmjn6FhJTh8inxpY\n9sdKruNcL91jNw/Ru0RFXUT2Eb0OW0gASCYjFC/p0315FIqA16Uoaj8h/oMg9PS/\nH3IGIkuHzA3K7UeNxgzXMqv9ubjLWQGEOmutrUHPA4pPBpQ7T33xnOZsXTu1/+LS\nwDmwT6jvpbxl9iv9oVdqpf2uHwVV0TjD3PQNHr2tnsYn2chaPb0/QgnFuIjWNgR2\nNoVwDyNYBaQZ5p2NrcBaH+BL1+rX2Ro7dtEAqk4uNT/Dm636otdPM0QCrYyRXnsz\nWPnYlb8WWgg2/+3HhWxVK16vbtlFQxMZ/Y6Di0yUxMECgYEAylVxf7ayaq3xP2Zv\nBTt7tloJAzRzf/sXZLZnbpmXXqT7xY+vr4LIl3d3azZCSuapUonMOo9iJ1TQSZo0\n6urK29RNQMr0omAqwm40L1ls4OpMIwDTee2v6FVx92130Xl/HIElKvOkCFRApp0e\nzyO0BrUQ0CE+bArO9wnnUanhHdkCgYEA4PrOJ5Pduo7i0BpcHjoth2OnnjEBfDDp\n1xITPD+xK3UUe96pexpYQTaBs7iOmE2qoy1wRjXrY5uIs4jy2qW6gV0nomt8tSVO\n9XtTPid+NgsUMGUSkyPRvwAdgM7KQeFX4wE/WWCTNUhR+3A03o3K5H1pKPE6skS8\nLUzw2u15c9kCgYAhB//mdKH97nWi/wsC1Komo94yyr5MV2aDR4/tK1/d0JtVrlZl\nFbm/mH5DX3FFHxDna52Otde1ShBS47zd2QTTDH6sJDX5yC9S3IDidfvjX/yb/keH\n8Ti1ejWHyjQ0W1xX//FcaLgPsAl56c6czFxYd/jNsY+qPb158QD1gQFCSQKBgAGE\nb4OA0cwCTrFMnDZv+gKhv22nsvTdd5ZFvu93FoDOh1bWh5oW8WVvMqy9n+iTDYWQ\nNuOiVXYUhHWBhPHIkFty/2NvCiZGnCO3szYGGinPJVG2PMvVQALjw08tFdwf4vHa\n/kxiBFwlorfpZ9Hgbu/fkKgACH9PS12a2T0AwQLxAoGBAIDkP5o0aLrIEnp11tpx\nNmqtJu6CkM4bBf/JOVD4+psptb8FMgtoIJUyDhlZFKY/dS/xYdpwrl+QS8s8yHnt\nLcEb09So9dQVOlKBJUraUfyRAu7ayliawXXaYQVhwLlXFOQEED8CP/bINu6v/cte\nlkyEACc8fBsBnA24GY/Py2we\n-----END PRIVATE KEY-----\n",

"public_key_fingerprint_md5": "a9:cc:2d:0f:66:b9:a9:89:94:f1:da:8f:93:df:63:d5",

"public_key_fingerprint_sha256": "SHA256:ias4wauIC4J/zd/cVoXT6UUSUZLQXaxdpJfkGAfRDz0",

"public_key_openssh": "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCx0P2wn8vmbxxEHX1XbQcD0EipnCdPeJ3kmgw1S5ZifiwlNYdr8keRDlqStYfnRoh3NCtCd4hlcnLHU4yWoptXEBzb67/yp3abYauxYpl6pByk1Pu/vpCjANy92CBp3dN7OfddT94G6RWP2L6Wc3Wh/JDNURAB29bxOlF1Qvlv9sizPVHMZbNStYPxdMUt/ZG6+/OGAFWK65YjSezdZAylroNy76dZ8YsKXbpaWmE2ahQC/zAJrLsqjKcbnyklb66pgDuVPHi00wuEhbusziuhhtdy2QKLTc9xbfHbk7zIpLNgiLnEfsJDuCDBfNzHivTo1eRoT38se4FIUlwgy8fx\n",

"public_key_pem": "-----BEGIN PUBLIC KEY-----\nMIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAsdD9sJ/L5m8cRB19V20H\nA9BIqZwnT3id5JoMNUuWYn4sJTWHa/JHkQ5akrWH50aIdzQrQneIZXJyx1OMlqKb\nVxAc2+u/8qd2m2GrsWKZeqQcpNT7v76QowDcvdggad3Tezn3XU/eBukVj9i+lnN1\nofyQzVEQAdvW8TpRdUL5b/bIsz1RzGWzUrWD8XTFLf2RuvvzhgBViuuWI0ns3WQM\npa6Dcu+nWfGLCl26WlphNmoUAv8wCay7KoynG58pJW+uqYA7lTx4tNMLhIW7rM4r\noYbXctkCi03PcW3x25O8yKSzYIi5xH7CQ7ggwXzcx4r06NXkaE9/LHuBSFJcIMvH\n8QIDAQAB\n-----END PUBLIC KEY-----\n",

"rsa_bits": 2048

},

"sensitive_attributes": []

}

]

}

],

"check_results": null

}

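As we can see, private_key_openssh, private_key_pem and private_key_pem_pkcs8, none of which should ever be known to a third party, are written to the tfstate file in plaintext. This has been part of Terraform's design from the beginning, and it is not expected to change in the foreseeable future. Whether you hard-code a secret in the code, pass it in through a variable (covered in a later chapter), or get creative and read it from an external source at run time with a function, the outcome is the same.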
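A common misunderstanding is that marking a value as sensitive protects it in state. The sketch below, which reuses the tls_private_key resource from above and adds a hypothetical output named private_key_pem, shows what the flag does and does not do.

```hcl
resource "tls_private_key" "example" {
  algorithm = "RSA"
}

# Hypothetical output added for illustration.
output "private_key_pem" {
  value = tls_private_key.example.private_key_pem

  # sensitive = true only redacts the value in plan and apply output.
  # The key is still written to the state file in plaintext.
  sensitive = true
}
```

The flag is still worth setting because it keeps the key out of terminal and CI logs, but it is not a substitute for protecting the state itself.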

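There are two ways to deal with this. One is to use a dynamic secrets manager such as Vault or AWS Secrets Manager to issue short-lived dynamic secrets (for example, valid for only 5 minutes, so that a secret read by someone else has already expired). The other is the topic of the next section: the Terraform Backend.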

1.3.2.1.3. Managing tfstate in Production: Backend

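So far our tfstate file has been a local file in the current working directory. If the computer breaks and the file is lost, the resources tracked by that tfstate can no longer be managed, and they leak.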

Also, if a team uses Terraform to manage a set of resources, how do team members share the state file? Can the tfstate file be checked in to source control?

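Checking the tfstate file in to source control is a serious mistake. It is like checking a database in to source control: if two people check out the same tfstate, make different changes to the code, and both apply, then checking the tfstate back in may hit conflicts that cannot be resolved. And if the repository is public, the plaintext secrets stored in the state are leaked.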

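To solve the problem of storing and sharing state, Terraform introduced remote state storage, known as a Backend. A Backend is an abstract remote storage interface, and as with Providers, there are Backends for many different storage services: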

- local
- remote
- azurerm
- consul
- cos
- gcs
- http
- kubernetes
- oss
- pg
- s3

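Note: in Terraform versions before 1.1.0, Backends were classified as standard or enhanced. Enhanced referred to the special remote Backend, which could both store state and run Terraform operations. This classification has been removed. See "Using Terraform Cloud" for details on storing state, running remote operations, and invoking Terraform Cloud directly from Terraform.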

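A state lock means that while a change is being applied against a tfstate, a global lock is placed on that state so that only one change can run at a time. Backends differ in whether they support state locking and in how they implement it. For example, the consul Backend uses a .lock node as the lock and a .lockinfo node to describe the session that holds it, with the tfstate stored at the path defined in the Backend configuration. The s3 Backend requires the user to supply a DynamoDB table to hold lock information, while the tfstate itself is stored in an S3 bucket. Readers can choose whichever Backend suits their situation. Next, I will use consul to demonstrate how a Backend works.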
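For comparison with the consul walkthrough that follows, here is a minimal sketch of the s3 arrangement described above, assuming the Terraform 1.7 series used in this chapter. The bucket name, key and table name are hypothetical, and both the bucket and the DynamoDB table must already exist.

```hcl
terraform {
  backend "s3" {
    bucket = "example-terraform-state"          # hypothetical bucket that stores the tfstate
    key    = "localstack-aws/terraform.tfstate" # object key of the state inside the bucket
    region = "us-east-1"

    # Hypothetical lock table. The s3 backend expects the table to have
    # a string partition key named "LockID".
    dynamodb_table = "example-terraform-locks"

    encrypt = true # ask S3 to encrypt the state object at rest
  }
}
```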

1.3.2.1.4. Introducing and Installing Consul

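Consul is an open-source tool from HashiCorp, aimed mainly at service discovery, centralized configuration, and service mesh. Consul also provides a distributed key-value store similar to ZooKeeper or etcd. The key-value data is replicated between Consul servers with the Raft consensus protocol (the Gossip protocol is used for cluster membership), so Consul can serve as storage for a Terraform Backend.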

Installing Consul is straightforward. On Ubuntu:

wget -O- https://apt.releases.hashicorp.com/gpg | sudo gpg --dearmor -o /usr/share/keyrings/hashicorp-archive-keyring.gpg

echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] https://apt.releases.hashicorp.com $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/hashicorp.list

sudo apt update && sudo apt install consul

On CentOS:

sudo yum install -y yum-utils

sudo yum-config-manager --add-repo https://rpm.releases.hashicorp.com/RHEL/hashicorp.repo

sudo yum -y install consul

On macOS:

brew tap hashicorp/tap

brew install hashicorp/tap/consul

On Windows, if you set up Chocolatey while following the Terraform installation instructions earlier:

choco install consul

To verify the installation:

$ consul

Usage: consul [--version] [--help] <command> [<args>]

Available commands are:

acl Interact with Consul's ACLs

agent Runs a Consul agent

catalog Interact with the catalog

config Interact with Consul's Centralized Configurations

connect Interact with Consul Connect

debug Records a debugging archive for operators

event Fire a new event

exec Executes a command on Consul nodes

force-leave Forces a member of the cluster to enter the "left" state

info Provides debugging information for operators.

intention Interact with Connect service intentions

join Tell Consul agent to join cluster

keygen Generates a new encryption key

keyring Manages gossip layer encryption keys

kv Interact with the key-value store

leave Gracefully leaves the Consul cluster and shuts down

lock Execute a command holding a lock

login Login to Consul using an auth method

logout Destroy a Consul token created with login

maint Controls node or service maintenance mode

members Lists the members of a Consul cluster

monitor Stream logs from a Consul agent

operator Provides cluster-level tools for Consul operators

peering Create and manage peering connections between Consul clusters

reload Triggers the agent to reload configuration files

resource Interact with Consul's resources

rtt Estimates network round trip time between nodes

services Interact with services

snapshot Saves, restores and inspects snapshots of Consul server state

tls Builtin helpers for creating CAs and certificates

troubleshoot CLI tools for troubleshooting Consul service mesh

validate Validate config files/directories

version Prints the Consul version

watch Watch for changes in Consul

With Consul installed, we can start a development-mode Consul server:

$ consul agent -dev

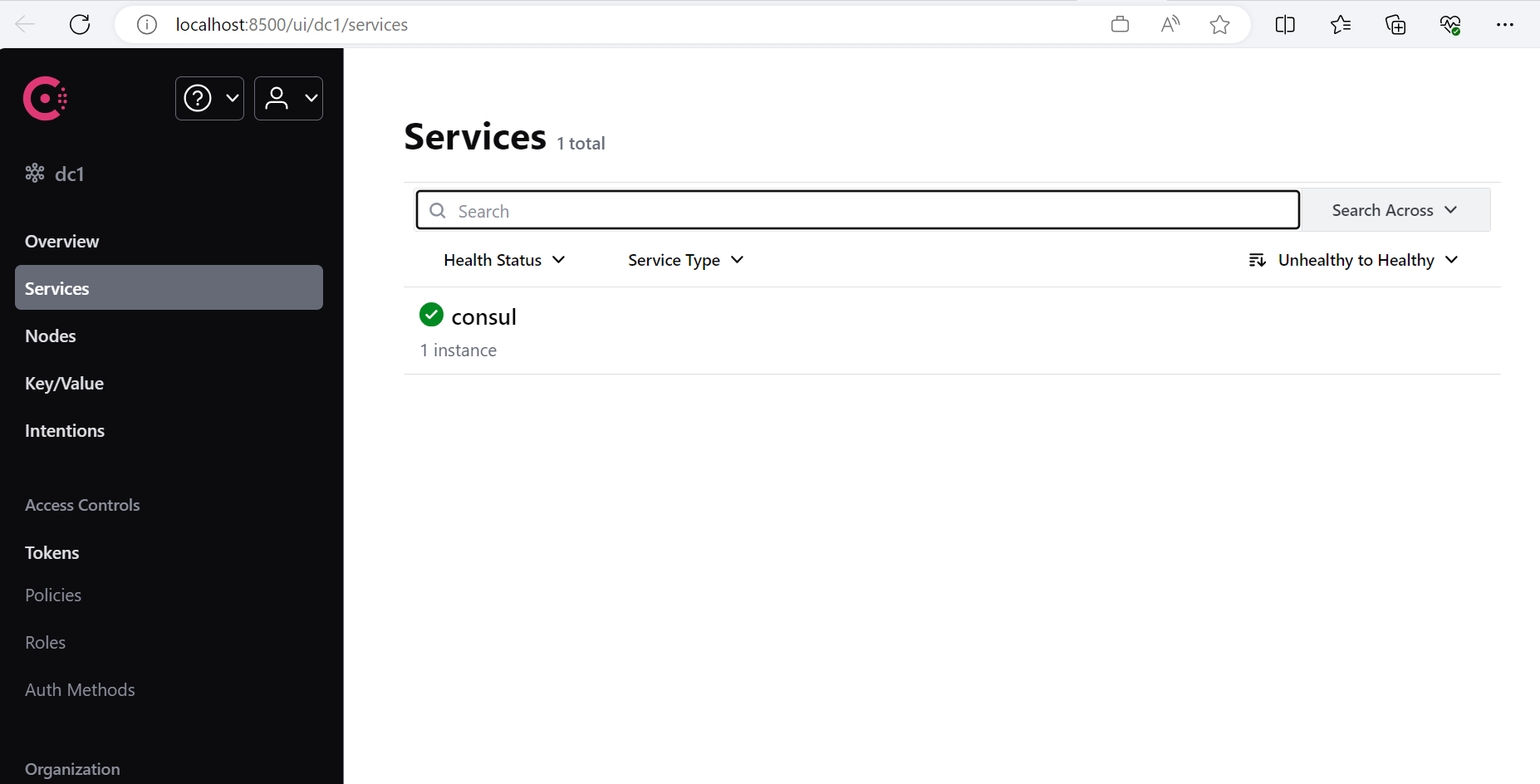

Consul exposes an HTTP endpoint on local port 8500, which we can open in a browser at http://localhost:8500 :

1.3.2.1.5. Using a Backend

We again use LocalStack to run a simple piece of Terraform code:

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~>5.0"

}

}

backend "consul" {

address = "localhost:8500"

scheme = "http"

path = "localstack-aws"

}

}

provider "aws" {

access_key = "test"

secret_key = "test"

region = "us-east-1"

s3_use_path_style = false

skip_credentials_validation = true

skip_metadata_api_check = true

skip_requesting_account_id = true

endpoints {

apigateway = "http://localhost:4566"

apigatewayv2 = "http://localhost:4566"

cloudformation = "http://localhost:4566"

cloudwatch = "http://localhost:4566"

docdb = "http://localhost:4566"

dynamodb = "http://localhost:4566"

ec2 = "http://localhost:4566"

es = "http://localhost:4566"

elasticache = "http://localhost:4566"

firehose = "http://localhost:4566"

iam = "http://localhost:4566"

kinesis = "http://localhost:4566"

lambda = "http://localhost:4566"

rds = "http://localhost:4566"

redshift = "http://localhost:4566"

route53 = "http://localhost:4566"

s3 = "http://s3.localhost.localstack.cloud:4566"

secretsmanager = "http://localhost:4566"

ses = "http://localhost:4566"

sns = "http://localhost:4566"

sqs = "http://localhost:4566"

ssm = "http://localhost:4566"

stepfunctions = "http://localhost:4566"

sts = "http://localhost:4566"

}

}

data "aws_ami" "ubuntu" {

most_recent = true

filter {

name = "name"

values = ["ubuntu/images/hvm-ssd/ubuntu-trusty-14.04-amd64-server-20170727"]

}

filter {

name = "virtualization-type"

values = ["hvm"]

}

owners = ["099720109477"] # Canonical

}

resource "aws_instance" "web" {

ami = data.aws_ami.ubuntu.id

instance_type = "t3.micro"

tags = {

Name = "HelloWorld"

}

}

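In the terraform block we added a backend block. It sets the address to localhost:8500 (the development-mode Consul server we just started), tells Terraform to reach it over http, and stores the tfstate under the localstack-aws path in Consul's key-value store.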
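The consul backend accepts a few more optional arguments than the three used here. The sketch below adds some of them for illustration; the values are assumptions chosen to match the development-mode server, not settings this chapter depends on.

```hcl
terraform {
  backend "consul" {
    address = "localhost:8500"
    scheme  = "http"
    path    = "localstack-aws"

    datacenter = "dc1" # assumed: the datacenter name a development-mode agent uses
    lock       = true  # state locking is on by default; false disables it
    gzip       = true  # compress the state before writing it to the key
  }
}
```

Changing backend arguments requires running terraform init again so that Terraform picks up the new settings.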

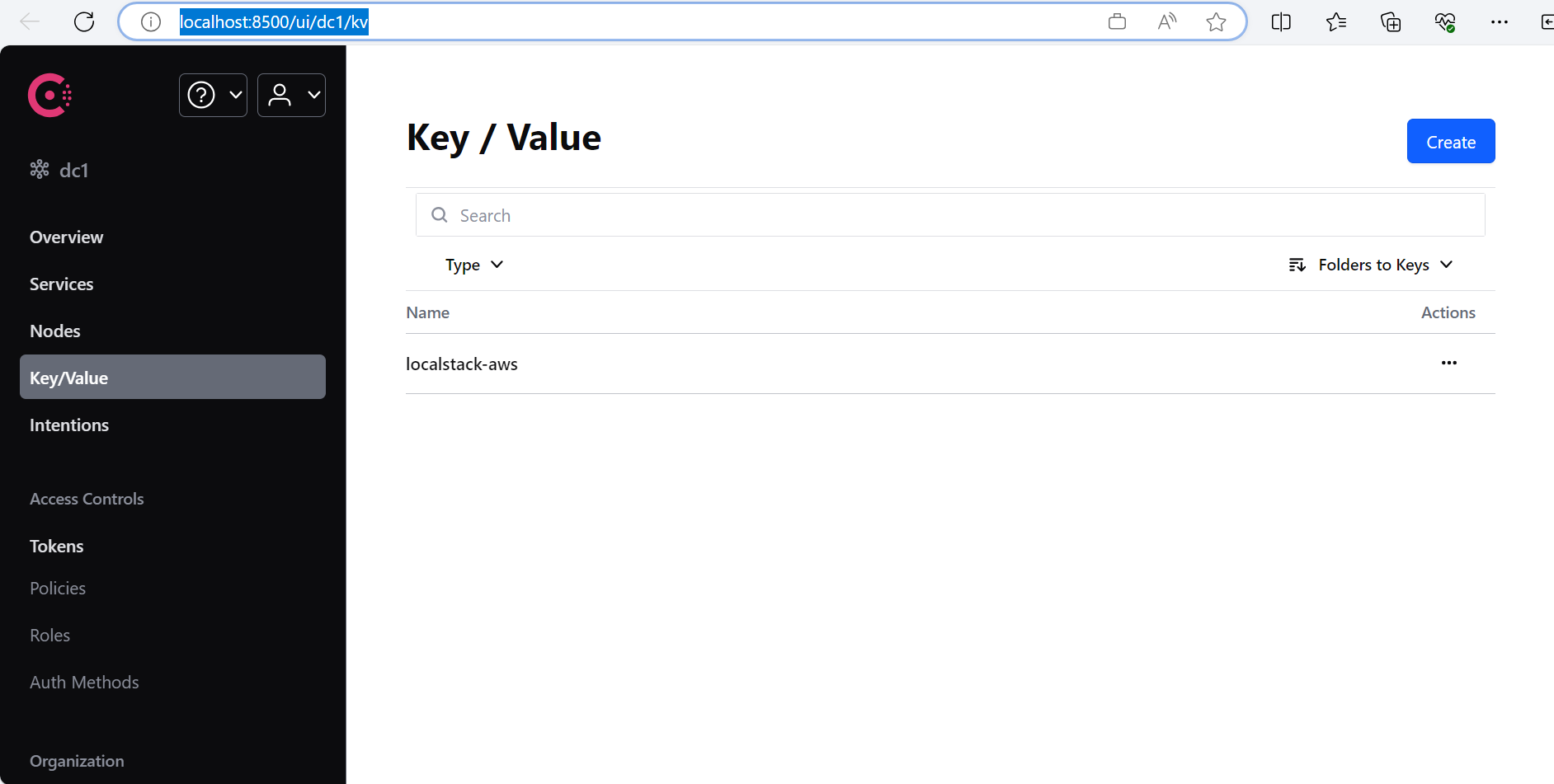

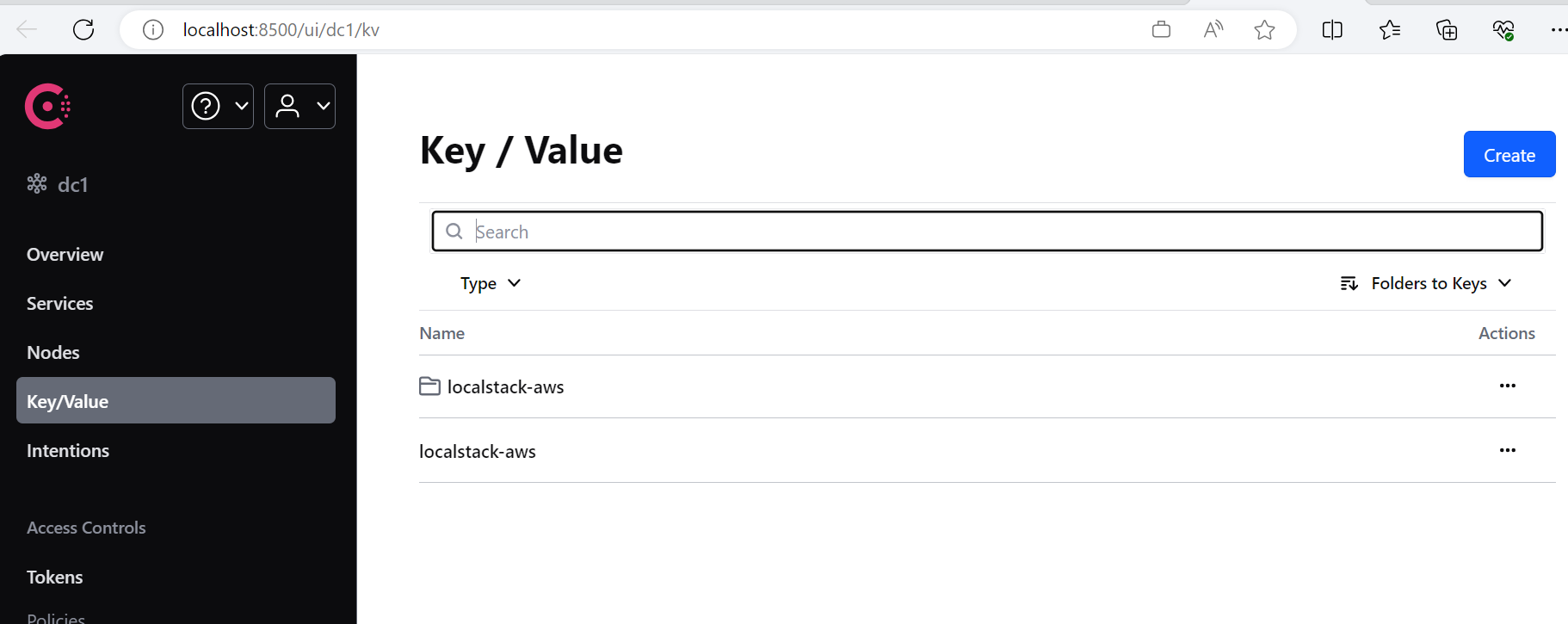

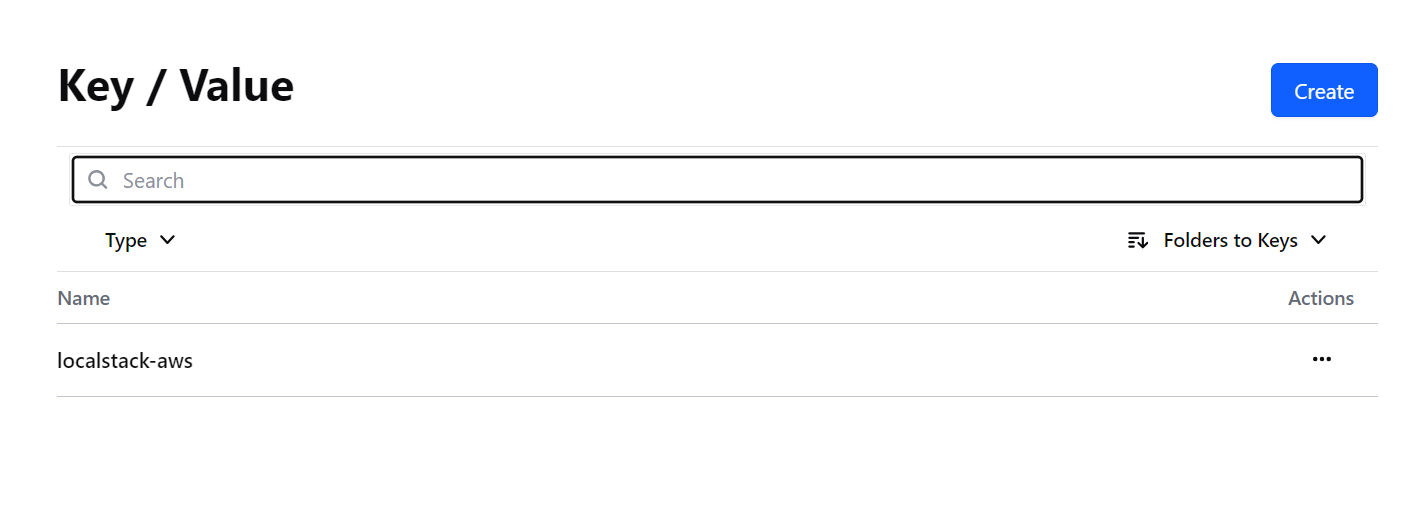

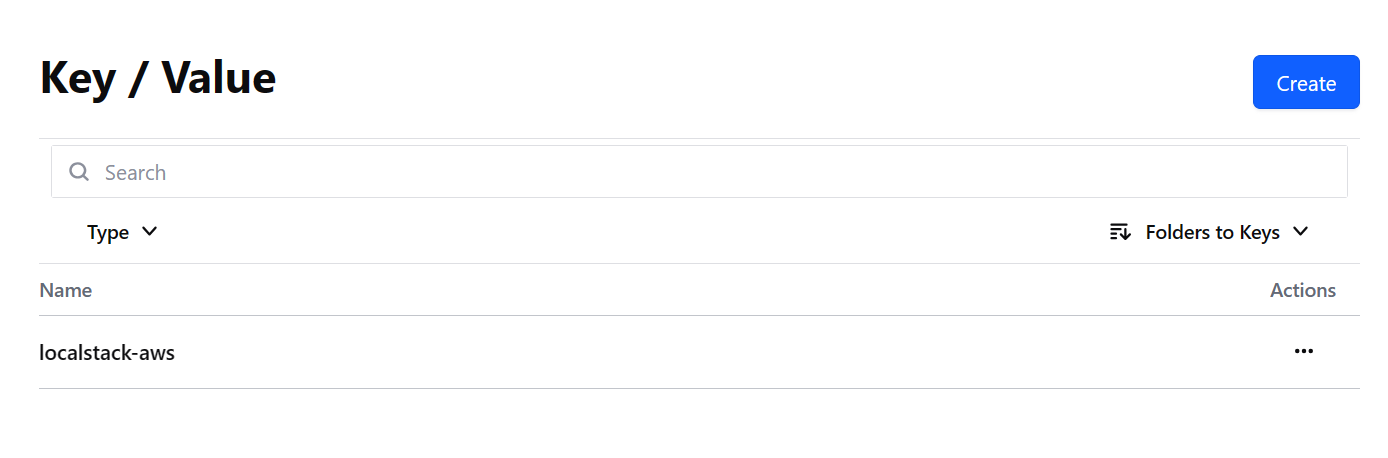

After terraform apply completes, open http://localhost:8500/ui/dc1/kv :

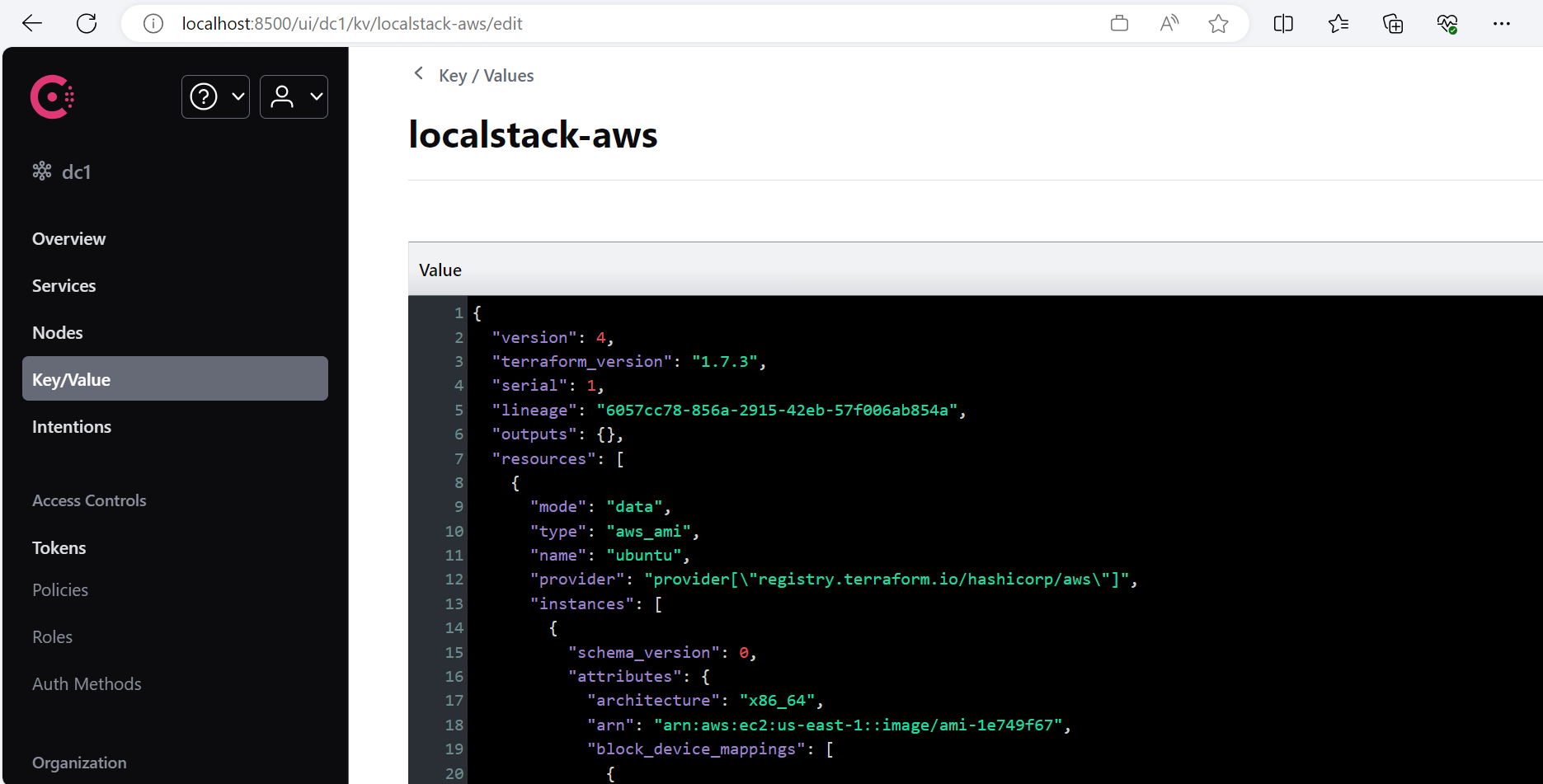

The localstack-aws key is there. Click it:

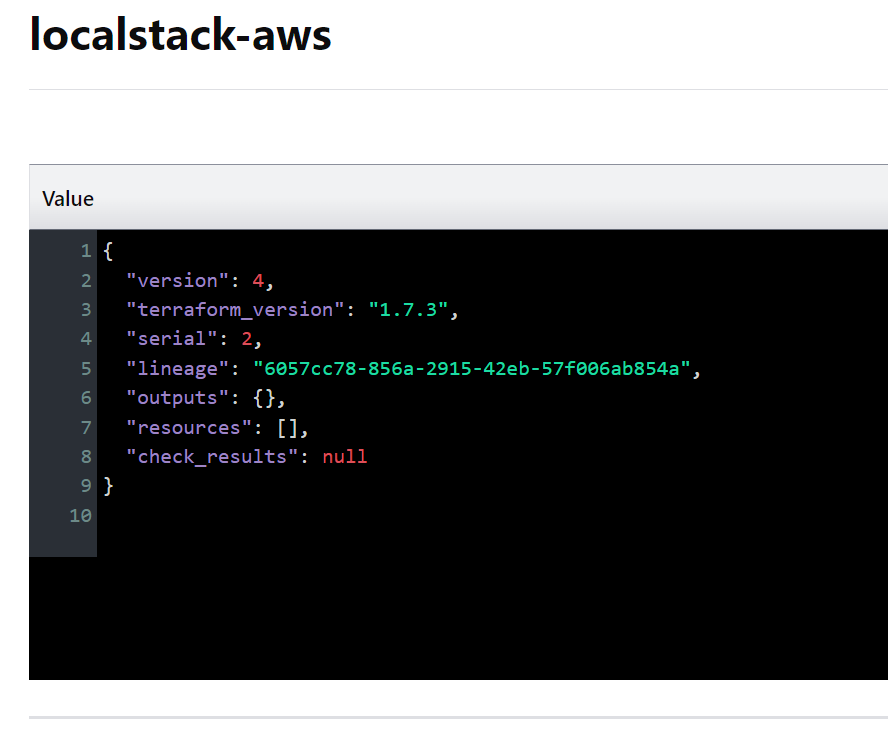

The contents of the tfstate file, previously kept in the working directory, are now stored in Consul under the key named localstack-aws.

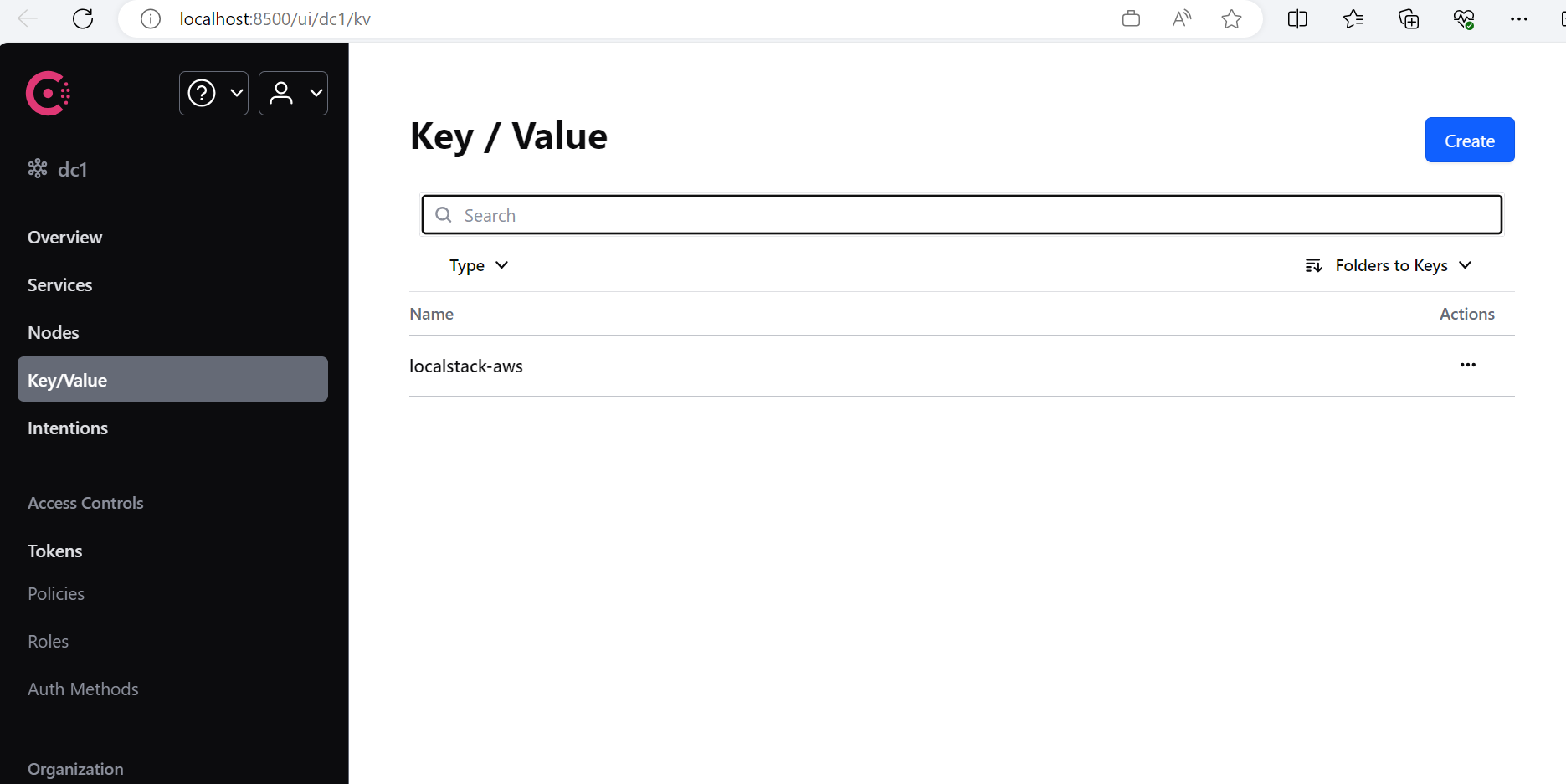

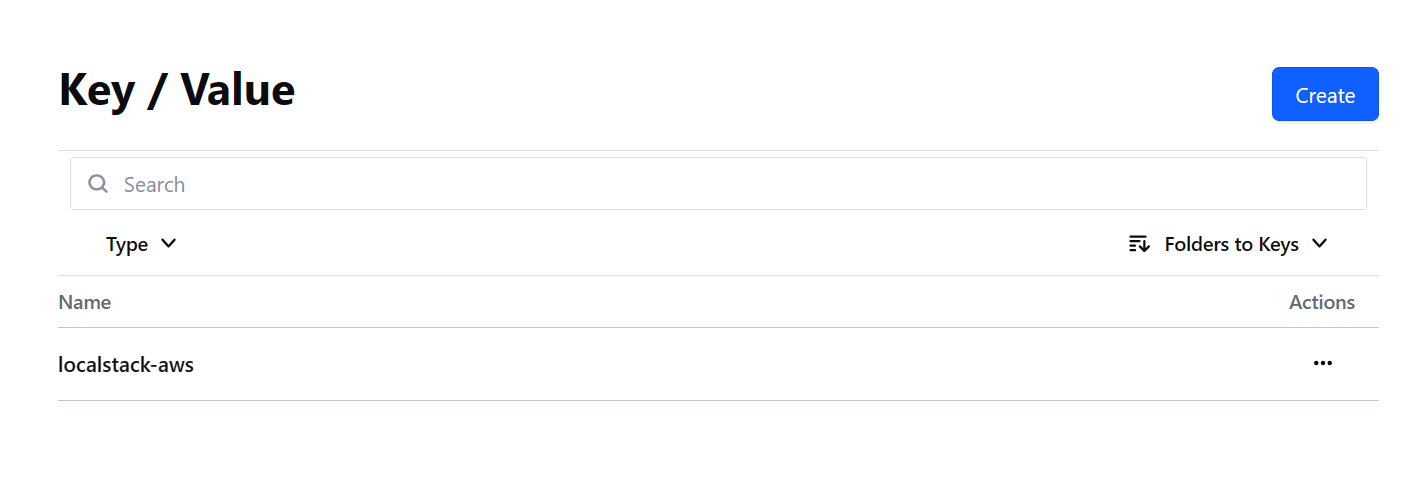

Now run terraform destroy and open http://localhost:8500/ui/dc1/kv again:

The localstack-aws key still exists. Click it:

Its content is empty, which shows that the infrastructure has been destroyed.

1.3.2.1.6. Observing the Lock File

Where is the lock during all of this, and how can we see it in action? Let us modify the code slightly:

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~>5.0"

}

}

backend "consul" {

address = "localhost:8500"

scheme = "http"

path = "localstack-aws"

}

}

provider "aws" {

access_key = "test"

secret_key = "test"

region = "us-east-1"

s3_use_path_style = false

skip_credentials_validation = true

skip_metadata_api_check = true

skip_requesting_account_id = true

endpoints {

apigateway = "http://localhost:4566"

apigatewayv2 = "http://localhost:4566"

cloudformation = "http://localhost:4566"

cloudwatch = "http://localhost:4566"

docdb = "http://localhost:4566"

dynamodb = "http://localhost:4566"

ec2 = "http://localhost:4566"

es = "http://localhost:4566"

elasticache = "http://localhost:4566"

firehose = "http://localhost:4566"

iam = "http://localhost:4566"

kinesis = "http://localhost:4566"

lambda = "http://localhost:4566"

rds = "http://localhost:4566"

redshift = "http://localhost:4566"

route53 = "http://localhost:4566"

s3 = "http://s3.localhost.localstack.cloud:4566"

secretsmanager = "http://localhost:4566"

ses = "http://localhost:4566"

sns = "http://localhost:4566"

sqs = "http://localhost:4566"

ssm = "http://localhost:4566"

stepfunctions = "http://localhost:4566"

sts = "http://localhost:4566"

}

}

data "aws_ami" "ubuntu" {

most_recent = true

filter {

name = "name"

values = ["ubuntu/images/hvm-ssd/ubuntu-trusty-14.04-amd64-server-20170727"]

}

filter {

name = "virtualization-type"

values = ["hvm"]

}

owners = ["099720109477"] # Canonical

}

resource "aws_instance" "web" {

ami = data.aws_ami.ubuntu.id

instance_type = "t3.micro"

tags = {

Name = "HelloWorld"

}

provisioner "local-exec" {

command = "sleep 1000"

}

}

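The change is a local-exec Provisioner added to the aws_instance definition. Provisioners get their own chapter later. For now it is enough to know that after Terraform creates the resource, it runs a command on the machine where the Terraform CLI is running: sleep 1000. That blocks the Terraform process long enough for us to inspect the lock. On Windows, change the provisioner to: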

provisioner "local-exec" {

command = "Start-Sleep -s 1000"

interpreter = ["PowerShell", "-Command"]

}

Now run terraform apply. This time the apply is blocked by the sleep and does not finish:

data.aws_ami.ubuntu: Reading...

data.aws_ami.ubuntu: Read complete after 1s [id=ami-1e749f67]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following

symbols:

+ create

Terraform will perform the following actions:

# aws_instance.web will be created

+ resource "aws_instance" "web" {

+ ami = "ami-1e749f67"

+ arn = (known after apply)

+ associate_public_ip_address = (known after apply)

+ availability_zone = (known after apply)

+ cpu_core_count = (known after apply)

+ cpu_threads_per_core = (known after apply)

+ disable_api_stop = (known after apply)

+ disable_api_termination = (known after apply)

+ ebs_optimized = (known after apply)

+ get_password_data = false

+ host_id = (known after apply)

+ host_resource_group_arn = (known after apply)

+ iam_instance_profile = (known after apply)

+ id = (known after apply)

+ instance_initiated_shutdown_behavior = (known after apply)

+ instance_lifecycle = (known after apply)

+ instance_state = (known after apply)

+ instance_type = "t3.micro"

+ ipv6_address_count = (known after apply)

+ ipv6_addresses = (known after apply)

+ key_name = (known after apply)

+ monitoring = (known after apply)

+ outpost_arn = (known after apply)

+ password_data = (known after apply)

+ placement_group = (known after apply)

+ placement_partition_number = (known after apply)

+ primary_network_interface_id = (known after apply)

+ private_dns = (known after apply)

+ private_ip = (known after apply)

+ public_dns = (known after apply)

+ public_ip = (known after apply)

+ secondary_private_ips = (known after apply)

+ security_groups = (known after apply)

+ source_dest_check = true

+ spot_instance_request_id = (known after apply)

+ subnet_id = (known after apply)

+ tags = {

+ "Name" = "HelloWorld"

}

+ tags_all = {

+ "Name" = "HelloWorld"

}

+ tenancy = (known after apply)

+ user_data = (known after apply)

+ user_data_base64 = (known after apply)

+ user_data_replace_on_change = false

+ vpc_security_group_ids = (known after apply)

}

Plan: 1 to add, 0 to change, 0 to destroy.

aws_instance.web: Creating...

aws_instance.web: Still creating... [10s elapsed]

aws_instance.web: Provisioning with 'local-exec'...

aws_instance.web (local-exec): Executing: ["PowerShell" "-Command" "Start-Sleep -s 1000"]

aws_instance.web: Still creating... [20s elapsed]

...

Open http://localhost:8500/ui/dc1/kv again:

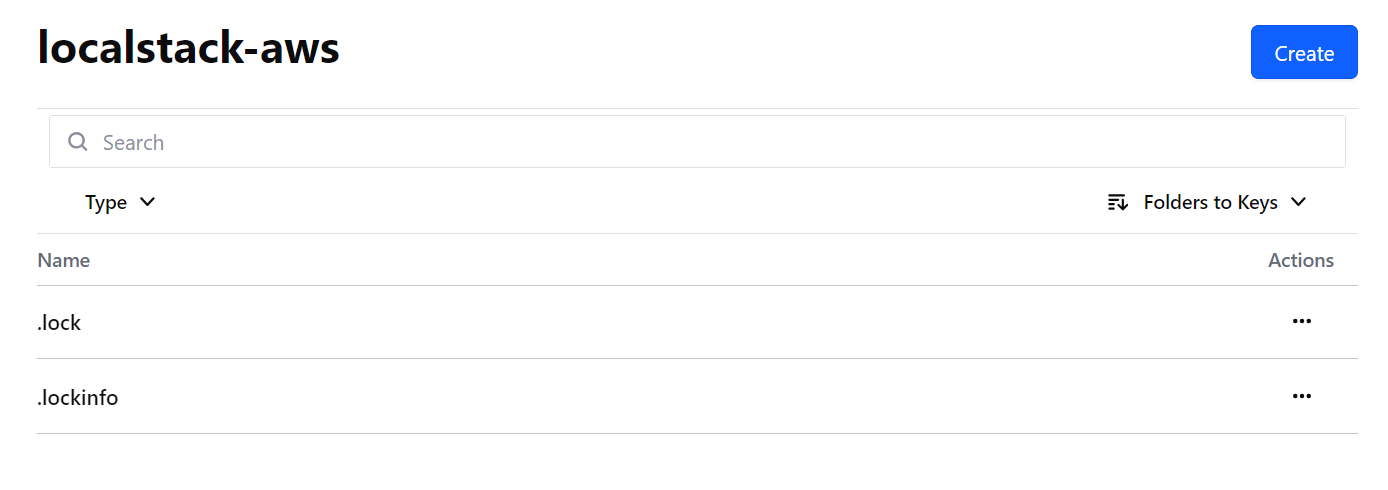

This time something has changed: besides the localstack-aws key there is now a folder with the same name. Click into the folder:

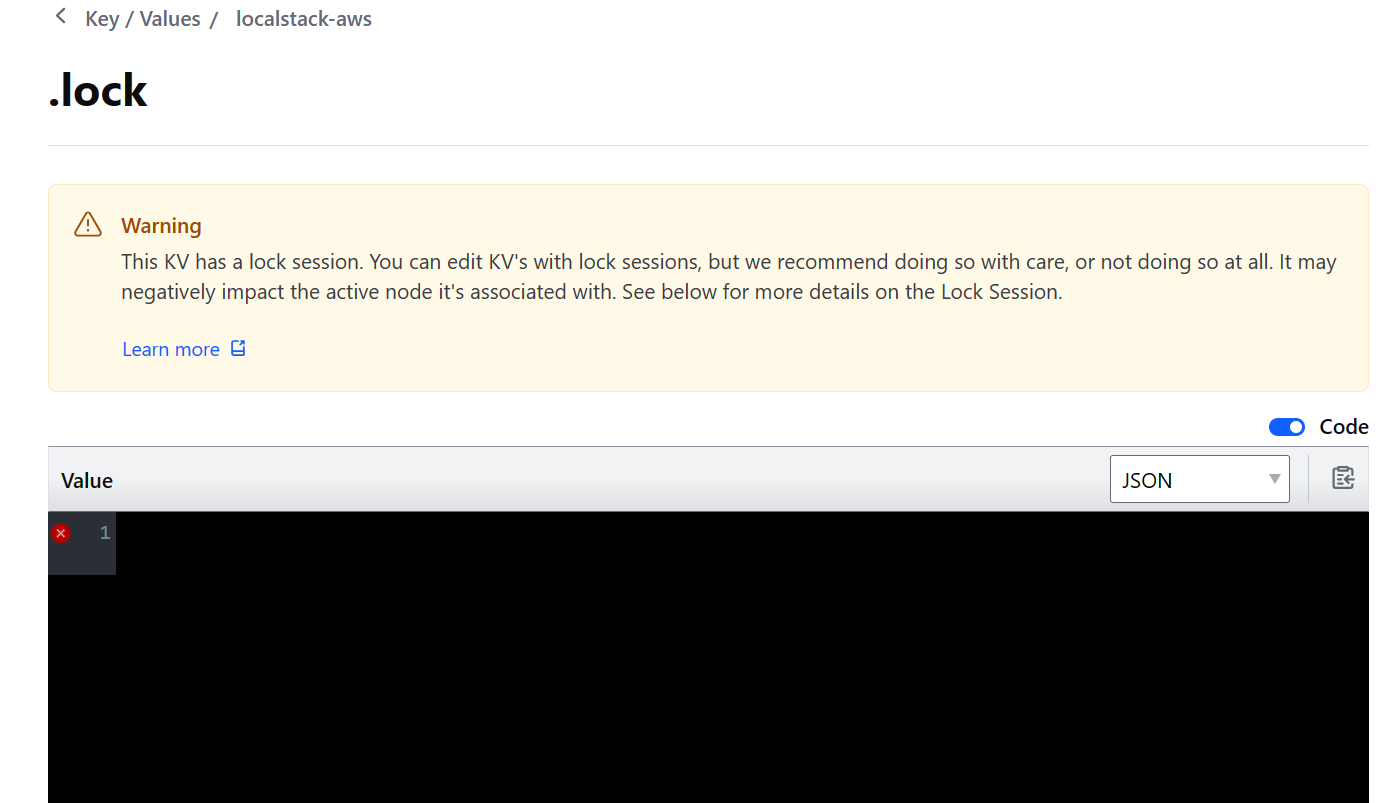

Here we can see the .lock and .lockinfo entries. Click .lock:

The Consul UI tells us that this key-value pair is currently locked, and its content is empty. Now look at .lockinfo:

.lockinfo records the lock ID, the operation being performed, and some other information.

Open a new terminal window and try another terraform apply in the same working directory:

$ terraform apply

Acquiring state lock. This may take a few moments...

╷

│ Error: Error acquiring the state lock

│

│ Error message: Lock Info:

│ ID: 11c859fd-d3e5-4eab-46d6-586b73133430

│ Path: localstack-aws

│ Operation: OperationTypeApply

│ Who: ***

│ Version: 1.7.3

│ Created: 2024-02-25 02:00:21.3700184 +0000 UTC

│ Info: consul session: 11c859fd-d3e5-4eab-46d6-586b73133430

│

│

│ Terraform acquires a state lock to protect the state from being written

│ by multiple users at the same time. Please resolve the issue above and try

│ again. For most commands, you can disable locking with the "-lock=false"

│ flag, but this is not recommended.

╵

As we can see, the attempt by a second person to change the same tfstate at the same time fails because it cannot acquire the lock.

Press Ctrl-C to stop the blocked terraform apply, then release the lock with terraform force-unlock 11c859fd-d3e5-4eab-46d6-586b73133430:

$ terraform force-unlock 11c859fd-d3e5-4eab-46d6-586b73133430

Do you really want to force-unlock?

Terraform will remove the lock on the remote state.

This will allow local Terraform commands to modify this state, even though it

may still be in use. Only 'yes' will be accepted to confirm.

Enter a value: yes

Terraform state has been successfully unlocked!

The state has been unlocked, and Terraform commands should now be able to

obtain a new lock on the remote state.

Then open http://localhost:8500/ui/dc1/kv again:

The folder that held the lock is gone.

1.3.2.1.7. Supplying Backend Configuration Dynamically

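Some readers will have noticed that nearly every setting in the code so far is hard-coded. Does Terraform support assigning values dynamically at run time? It does: values can be passed to provider, data and resource blocks through variables.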

There are some exceptions, and the backend configuration is one of them. A backend block accepts only hard-coded values, or no values at all.

This limitation comes from the order in which the Terraform runtime processes things. It was not until May 2019 that an official solution appeared: partial configuration.

Put simply, we can leave the settings out of the backend declaration in the Terraform code:

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~>5.0"

}

}

backend "consul" {

}

}

The settings then go in a separate file. For example, create a file named backend.hcl in the working directory:

address = "localhost:8500"

scheme = "http"

path = "localstack-aws"

In effect we have moved the attribute assignments out of the backend "consul" block into a standalone hcl file. We then pass the -backend-config option to terraform init:

$ terraform init -backend-config=backend.hcl

Initialization succeeds this way too. With this patch-like approach we can reuse Terraform code written by someone else and, at run time, supply our own Backend settings as a separate backend-config file.
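Because the settings live outside the code, the same configuration can point at a different Backend simply by passing a different file. The sketch below is a second backend-config file; its file name, address and path are hypothetical.

```hcl
# backend-prod.hcl (hypothetical file name and values)
address = "consul.example.internal:8501" # assumed production Consul endpoint
scheme  = "https"
path    = "prod/localstack-aws"
```

It would be used as `terraform init -backend-config=backend-prod.hcl`. Individual settings can also be given on the command line as key=value pairs, for example `-backend-config="path=prod/localstack-aws"`. When a working directory has already been initialized against another Backend, init also needs `-reconfigure` or `-migrate-state` to say what should happen to the existing state.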

1.3.2.1.8. Access Control and Versioning for Backends

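The Backend abstraction itself has no access control or versioning; both depend entirely on the concrete Backend implementation. Taking AWS S3 as an example, we can attach different IAM policies to different buckets to stop development and test staff from operating directly on production, or to give some people read-only access to the state. We can also enable S3 versioning as protection against a mistaken change to the state file (the Terraform CLI has commands that modify state).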
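The S3 measures described above might look like the sketch below. It assumes the state bucket is itself managed from a separate Terraform configuration with AWS provider 4.0 or later; the bucket name and resource labels are hypothetical.

```hcl
# Hypothetical bucket that holds the state files.
resource "aws_s3_bucket" "tfstate" {
  bucket = "example-terraform-state"
}

# Keep earlier versions of every state object so a bad write can be rolled back.
resource "aws_s3_bucket_versioning" "tfstate" {
  bucket = aws_s3_bucket.tfstate.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Read-only access to the state, to be attached to whichever users or roles
# should be able to inspect it without being able to change it.
data "aws_iam_policy_document" "tfstate_read_only" {
  statement {
    actions   = ["s3:ListBucket"]
    resources = [aws_s3_bucket.tfstate.arn]
  }

  statement {
    actions   = ["s3:GetObject"]
    resources = ["${aws_s3_bucket.tfstate.arn}/*"]
  }
}
```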

1.3.2.1.9. Storing State in Isolation

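With Backends covered, we turn to another question. Suppose our Terraform code creates a general-purpose piece of infrastructure, such as an EKS or AKS cluster in the cloud, or a static website on S3. We may need to create and maintain many similar but mutually isolated stacks for many teams, or maintain four deployments of an application: development, test, staging, and production. How do we keep the state files of these deployments stored and managed separately?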

One simple approach is to use separate folders.

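We can give the infrastructure of each product or department its own folder, keep the same code files in each folder, and use a different backend-config for each so that the state files are saved to different Backends. This gives the strongest isolation. The drawback is that we have to keep many copies of the same code.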
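One way to soften the copying drawback, offered here as a sketch rather than as the approach this chapter uses, is to keep the shared code in a module and leave only a thin entry file in each folder. The folder layout, the module path and its instance_type input variable are all hypothetical.

```hcl
# environments/prod/main.tf (hypothetical layout)
terraform {
  # Left empty on purpose: each folder passes its own backend-config file
  # to terraform init, so every environment gets its own state.
  backend "consul" {}
}

module "stack" {
  source = "../../modules/stack" # hypothetical shared module holding the real code

  instance_type = "t3.micro" # assumed input variable declared by that module
}
```

Each environment folder then differs only in its backend-config file and the values it passes to the module.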

The second, more lightweight approach is Workspaces. Note that a Workspace in open-source Terraform and a Workspace in the Terraform Cloud service are two different concepts. This section covers the open-source one.

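Workspaces let us keep any number of isolated state files in the same folder with the same Backend configuration. Consider again the example that uses the development-mode Consul server as the Backend: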

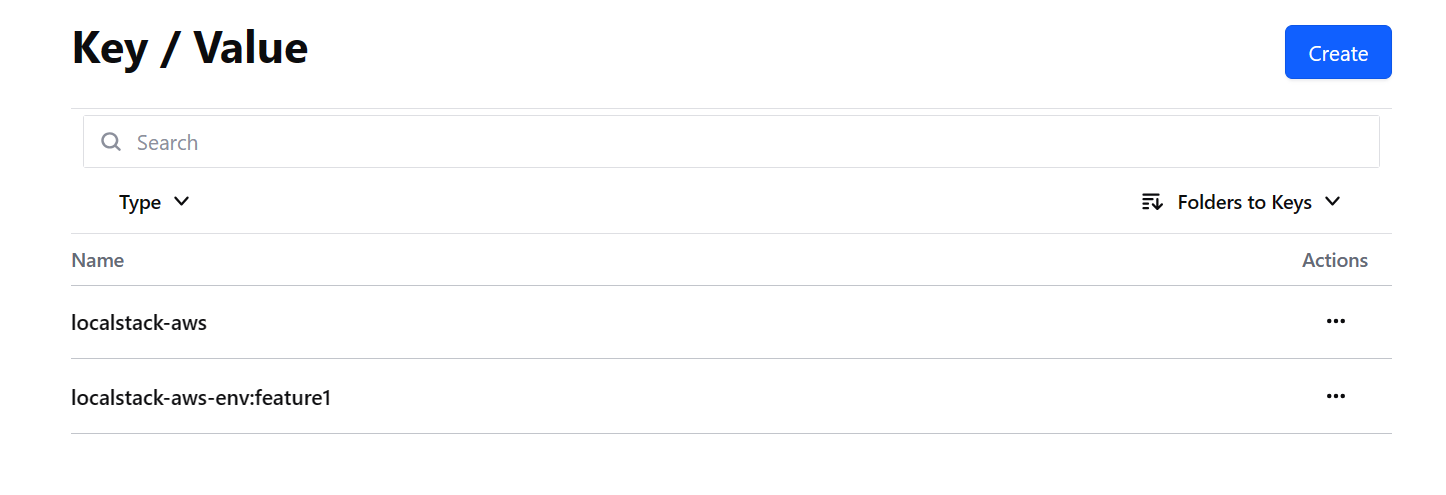

At the moment there is one state file, named localstack-aws. Now run this command in the working directory:

$ terraform workspace new feature1

Created and switched to workspace "feature1"!

You're now on a new, empty workspace. Workspaces isolate their state,

so if you run "terraform plan" Terraform will not see any existing state

for this configuration.

The workspace command has created a Workspace named feature1. Now look at the .terraform folder:

..

├── environment

├── providers

│ └── registry.terraform.io

│ └── hashicorp

│ └── aws

│ └── 5.38.0

......

There is a new file named environment whose content is feature1. This is the file Terraform uses to record which Workspace the current context is using.

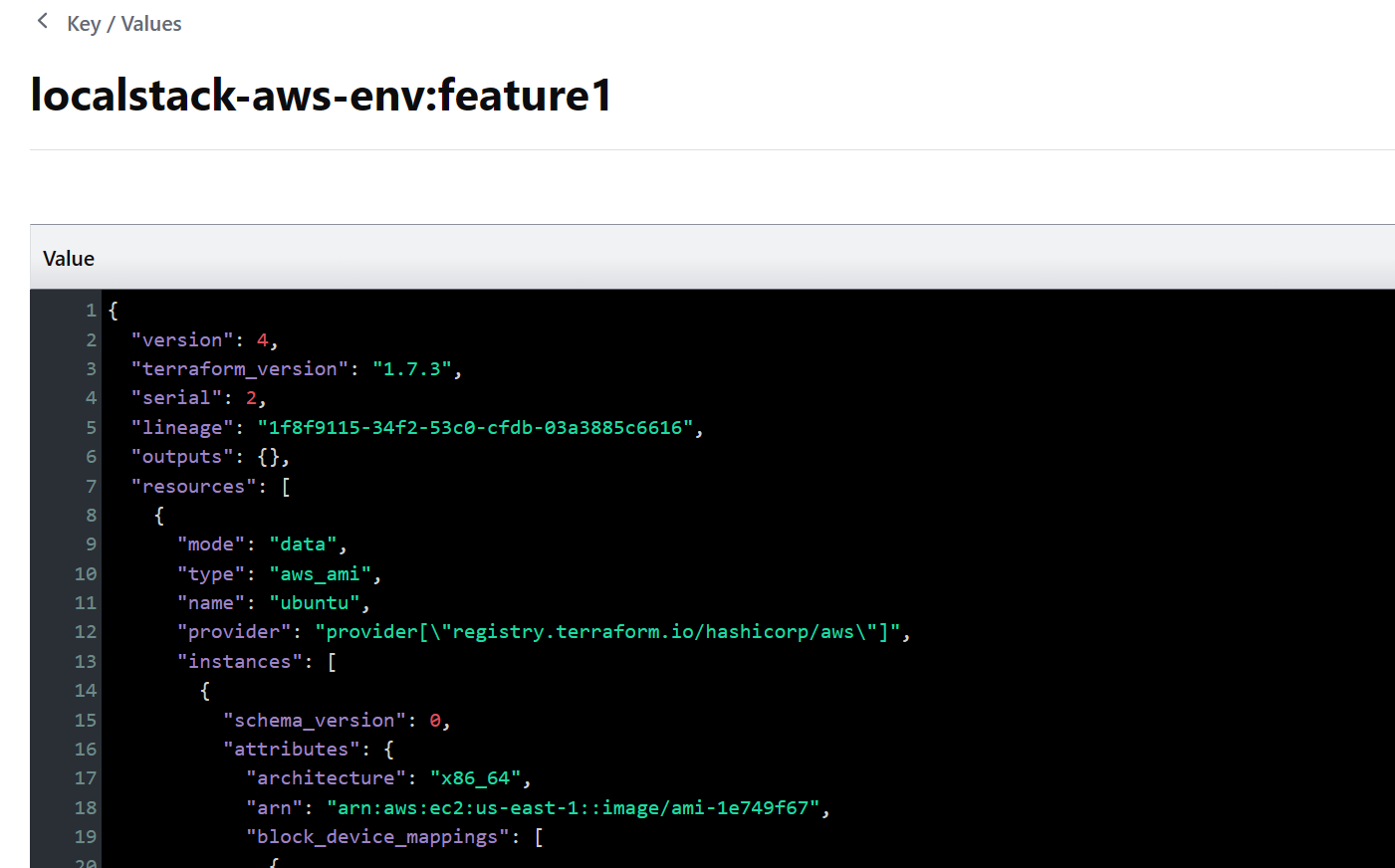

Looking at the Consul store again, there is a new entry: localstack-aws-env:feature1. This is the separate state file Terraform created for the feature1 Workspace. Run apply and then look at the content of this entry:

The state has been written to the feature1 state file.

The following command lists every Workspace in the current Backend:

$ terraform workspace list

default

* feature1

There are two Workspaces, default and feature1, and we are currently on feature1. The following command switches back to default:

$ terraform workspace select default

Switched to workspace "default".

The following command confirms that we are back on default:

$ terraform workspace show

default

The following command deletes feature1:

$ terraform workspace delete feature1

╷

│ Error: Workspace is not empty

│

│ Workspace "feature1" is currently tracking the following resource instances:

│ - aws_instance.web

│

│ Deleting this workspace would cause Terraform to lose track of any associated remote objects, which would then require you to

│ delete them manually outside of Terraform. You should destroy these objects with Terraform before deleting the workspace.

│

│ If you want to delete this workspace anyway, and have Terraform forget about these managed objects, use the -force option to

│ disable this safety check.

╵

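Terraform found that feature1 still has resources that have not been destroyed, so it refused the delete request. Because this example runs against LocalStack, there is no real resource leak to worry about, and we can force the deletion with: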

$ terraform workspace delete -force feature1

Deleted workspace "feature1"!

WARNING: "feature1" was non-empty.

The resources managed by the deleted workspace may still exist,

but are no longer manageable by Terraform since the state has

been deleted.

Looking at the Consul store again, the feature1 state file has been deleted:

Backends that currently support multiple workspaces:

- AzureRM

- Consul

- COS

- GCS

- Kubernetes

- Local

- OSS

- Postgres

- Remote

- S3

1.3.2.1.10. Which Kind of Isolation to Use

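Compared with folder-based isolation, Workspace-based isolation is simpler: there is only one copy of the code, no extra code has to be written for Workspaces, and switching between them takes a single command.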

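The drawbacks of Workspaces are just as clear. All Workspaces share the same Backend configuration, so anyone with read and write access to one Workspace can read every other Workspace under the same Backend path. Workspaces are also selected implicitly (through the command line), so people sometimes forget which Workspace they are working in.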
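One way to make the active Workspace harder to overlook is to put its name into the resources themselves through the built-in terraform.workspace value. The sketch below adapts the aws_instance from the earlier example; the per-workspace instance types are assumptions chosen for illustration.

```hcl
locals {
  # Hypothetical sizes per workspace; any workspace not listed here
  # falls back to the default passed to lookup() below.
  instance_type_by_workspace = {
    default  = "t3.micro"
    feature1 = "t3.nano"
  }
}

resource "aws_instance" "web" {
  ami           = data.aws_ami.ubuntu.id # data source from the earlier example
  instance_type = lookup(local.instance_type_by_workspace, terraform.workspace, "t3.micro")

  tags = {
    # The workspace name appears on the resource and in every plan.
    Name = "HelloWorld-${terraform.workspace}"
  }
}
```

This does not remove the shared-access drawback, but the plan output and the resource names now show which Workspace a change belongs to.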

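The scenario Terraform's authors designed Workspaces for is this: a developer wants to change existing infrastructure and run some tests, but does not want to risk modifying the existing environment directly. The developer can use a Workspace to create a parallel environment identical to the existing one, then make changes and run tests and experiments there.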

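Workspaces correspond to the trunk and branch model in source control. If the team needs to maintain different infrastructure for different products, or separate development, test, staging, and production environments, it is better to use separate folders with separate backend-config files.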